I recently read an interesting take on Why Intel can’t kill x86. Even video game console manufacturers are encountering the same issues. The solution I’m about to propose must have been proposed a hundred times over, but I think now is a good time to revisit it: virtualization and emulation. Let me explain.

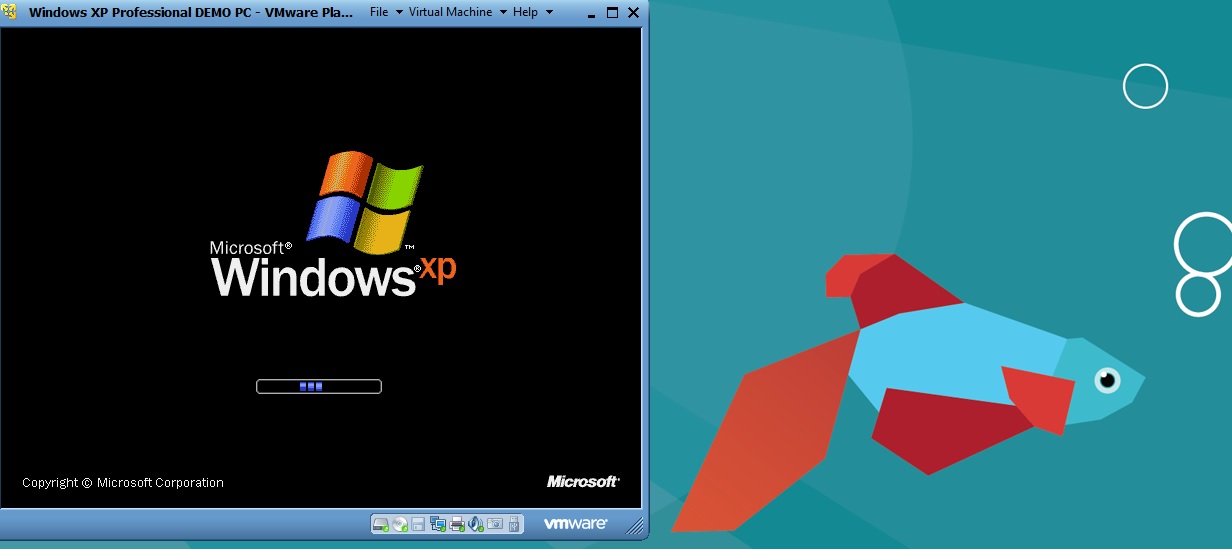

Virtualization is an abstract process and can be hard to understand. It allows you to “virtually” run another PC within your own. In the screenshot above, I am booting a Windows XP computer within a Windows 8 PC. In order to do this, the “guest” or virtual PC must be configured to be weaker than the “host” PC. The host PC still needs enough resources (like memory, hard drive space, and CPU cycles) to keep running, but it can dedicate as much or as little of the remainder as you like to the guest. Emulation is a similar process, but it usually requires more processing power: you aren’t just “hosting” a second machine that shares your hardware, you are recreating an entire second machine by mimicking the hardware of the previous environment in software, so that the old software can run on top of it.
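To make the idea of “mimicking hardware in software” a little more concrete, here is a minimal sketch of what an emulator does at its core: it fetches each guest instruction, works out what it means, and carries it out using the host’s own resources. The four-instruction “guest CPU” below is invented purely for illustration; it is not x86 or any real chip, and real emulators are far more involved.

```python
# Toy emulator: a hypothetical 4-instruction "guest" CPU interpreted on the host.
# The instruction set is made up for illustration; it is not any real architecture.

def run(program, max_steps=1000):
    """Interpret a list of (opcode, operand) tuples; return (accumulator, steps)."""
    acc = 0      # the guest's single accumulator register
    pc = 0       # the guest's program counter
    steps = 0    # number of guest instructions the host has emulated

    while pc < len(program) and steps < max_steps:
        opcode, operand = program[pc]    # fetch the next guest instruction
        steps += 1
        if opcode == "LOAD":             # decode and execute it on the host
            acc = operand
            pc += 1
        elif opcode == "ADD":
            acc += operand
            pc += 1
        elif opcode == "JNZ":            # jump to `operand` if acc is not zero
            pc = operand if acc != 0 else pc + 1
        elif opcode == "HALT":
            break
        else:
            raise ValueError(f"unknown guest opcode: {opcode}")
    return acc, steps


# A guest program that counts down from 3 to 0, then halts.
countdown = [
    ("LOAD", 3),
    ("ADD", -1),
    ("JNZ", 1),
    ("HALT", 0),
]

final_acc, guest_steps = run(countdown)
print(final_acc, guest_steps)  # 0 8
```

Notice that every single guest instruction costs the host a tuple unpack, a few comparisons, and some bookkeeping. That per-instruction overhead is why the host generally needs to be noticeably faster than the machine it is emulating.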

Gamers have been “emulating” for years. Software has been created to emulate many of the previous generations of consoles on newer consoles. This is possible because each generation of console is more powerful than the last. For example, the original Nintendo Entertainment System (first released in Japan as the Famicom in 1983) ran at a clock speed of less than 2MHz. The Super Nintendo doubled that at just over 3.5MHz. For a more modern comparison, the Wii U has a Graphics Processing Unit which, alone, is clocked at over 500MHz.

As things grow in speed, it seems natural that they should be able to do anything the older systems could do. The hang-up has been that we have stalled out on increasing clock speed. Newer, faster processors suck up too much energy and get too hot. The Pentium 4, once upon a time, reached 3.8GHz, and Intel cancelled the planned 4GHz model. Many CPUs today are still clocked at roughly half of that speed, but they have multiple cores, allowing them to literally multi-task: rather than performing a series of tasks in rapid succession, a multi-core processor really does more than one thing at a time.

So if we have all of this processing power, why can’t we break away from old traditions? Moving to 64-bit has been a hassle, and moving beyond that is going to take a major leap. The migration from 16-bit to 32-bit was mainly difficult for government agencies and large corporations; most Americans were able to make the 32-bit jump without even realizing it, because they didn’t depend on applications the way they do now. If you move to an entirely new architecture, away from the “x86” architecture we have been using for decades, you need to be able to code for that platform. Programmers will need to build their software so that its instructions reach the processor in a form the processor knows what to do with. That requires new compilers and development tools, and it will take years for companies to bring their software over to the new platform. Nobody wants to make that investment in time or financial resources, and nobody wants to wait for the apps to arrive, so chipmakers are forced to avoid moving to a new platform.

The only way out of this mess we have put ourselves in is emulation. You need to be able to build a “wrapper” of sorts for any application, and get it to run within an emulated environment on top of your new architecture. Every single application you use would need to emulate the underlying environment it previously lived in. That means that, in order to run multiple programs, your newer, more modern architecture needs to be substantially stronger and faster than the old environment. Newer and more powerful architectures show promise, but nothing so far has been enough of a leap over the previous technology that we could package it, put it in the home, and still run the applications we’re used to.
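As a rough back-of-the-envelope illustration of why the new architecture has to be so much stronger, here is a small sketch. The overhead factor is a made-up placeholder, not a measurement; real emulation overhead varies enormously with the technique used and the hardware involved. The function name and parameters are my own, for illustration only.

```python
def required_host_mhz(guest_mhz, host_cycles_per_guest_cycle, programs):
    """Estimate the host clock speed needed to emulate several guest programs.

    host_cycles_per_guest_cycle is an ASSUMED overhead factor, not measured data.
    """
    return guest_mhz * host_cycles_per_guest_cycle * programs


# Example: four emulated programs, each expecting a 2 MHz machine (roughly the
# NES figure mentioned earlier), with an assumed 10x emulation overhead.
estimate = required_host_mhz(2.0, 10, 4)
print(estimate)  # 80.0
```

The exact numbers don’t matter. The point is that the cost multiplies: overhead per instruction, times the speed each program expects, times the number of programs you want open at once.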

To make the jump, we’re going to need to re-think a lot of things we’ve considered “the norm” for decades. Desktop PCs, the shapes of the tower under your desk, the cards we plug into their insides… it’s time for some “out of the box” thinking to take us in a new direction of computer hardware design and architecture efficiency. I think something radical is on the horizon. I’m not exactly sure what or when, but since I am thinking about it now, I hope I won’t have the same knee-jerk reaction I expect many people to have when it arrives. What we get in the future may not be compatible with what you have now, but hopefully it will be powerful enough to emulate it.